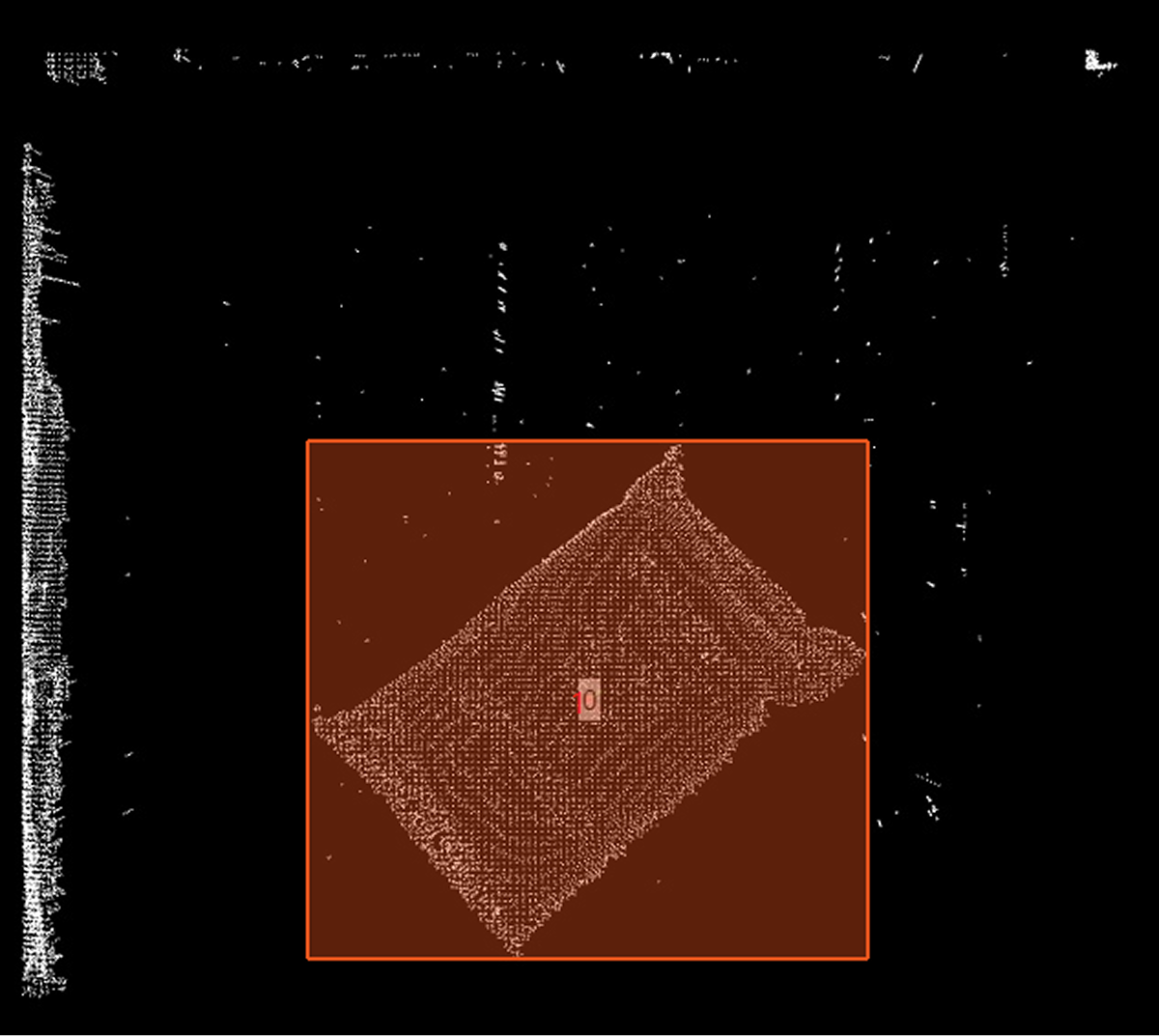

🔷Text Prompt Detection

Function Description

This operator uses the Grounding DINO model for open-vocabulary object detection: it does not need to be trained in advance on specific object categories, so any object that can be described in text can potentially be detected. Given English text prompts (words or sentences) entered by the user, it detects the objects in the image that match the descriptions and outputs their bounding boxes, categories, and confidence scores.

Usage Scenarios

- Open-vocabulary detection: Detect objects of categories not included in the training set, as long as they can be described in English, for example "the red apple on the table" or "person wearing a hat".
- Fine-grained detection: Distinguish objects using more specific descriptions, for example "cat" versus "dog", or "sedan" versus "truck".
- Specific attribute detection: Detect objects with particular attributes, for example "the open door" or "a blue chair".

Input and Output

Input

Image: The color image to be processed (must be in RGB format). Currently, only a single image can be input at a time.

Output

Detection Results: A list of detection results in which each entry represents one detected instance. Each entry contains the instance's bounding box (usually an axis-aligned, horizontal box), its confidence score, the category ID assigned to it (according to the Category Mapping parameter), and a polygon contour identical to the bounding box.
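To make the output structure concrete, here is a minimal Python sketch of one detection record. The class `Detection`, its field names, and the `(x_min, y_min, x_max, y_max)` pixel box format are assumptions for illustration, not the operator's actual data types; the sketch only shows how a polygon contour identical to an axis-aligned box follows from the box's four corners.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical box format: axis-aligned (x_min, y_min, x_max, y_max) in pixels.
BoxXYXY = Tuple[float, float, float, float]


@dataclass
class Detection:
    """One detected instance (illustrative field names, not the operator's API)."""
    box: BoxXYXY
    score: float        # confidence score in [0, 1]
    category_id: int    # ID assigned through the Category Mapping parameter
    polygon: List[Tuple[float, float]] = field(default_factory=list)


def box_to_polygon(box: BoxXYXY) -> List[Tuple[float, float]]:
    """Return the four corners of an axis-aligned box, clockwise from the top-left
    (image coordinates, with y pointing down)."""
    x_min, y_min, x_max, y_max = box
    return [(x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max)]


def make_detection(box: BoxXYXY, score: float, category_id: int) -> Detection:
    # The polygon contour describes the same region as the bounding box.
    return Detection(box, score, category_id, box_to_polygon(box))


if __name__ == "__main__":
    det = make_detection((10.0, 20.0, 110.0, 70.0), 0.82, 0)
    print(det.polygon)
```

Storing the polygon explicitly, even though it duplicates the box, lets downstream steps that expect contours consume these results without special-casing.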

Parameter Description


Weight File

Parameter Description

Specifies the Grounding DINO model weight file (usually in .pth format) used for detection. A valid model file must be selected. Available models include Swin-T and Swin-B. The official models can be downloaded from the designated paths.

Parameter Adjustment

Choose a model that matches your task requirements and hardware capabilities. The Swin-B model is larger and may be more accurate, but it is slower; the Swin-T model is smaller and faster.

Enable GPU

Parameter Description

Specifies whether to run model inference on the GPU. If this option is checked, make sure the computer has a compatible NVIDIA graphics card and a corresponding CUDA environment. It is off by default.

Parameter Adjustment

Checking this option can significantly improve processing speed. If no compatible GPU is available or its VRAM is insufficient, leave this option unchecked.

BERT Language Model Path

Parameter Description

Specifies the path to the local BERT language model folder.

Parameter Adjustment

The operator depends on the BERT language model. If the environment can access the internal model server, no manual configuration is needed; the software downloads the model automatically. If the server cannot be accessed, manually download bert-base-uncased.zip, extract it, and specify the extracted path in the initialization parameters.

BERT language model download addresses:

Subsequent runs: Once the model has been loaded successfully (automatically or manually), this parameter usually does not need to be set again unless the model files are moved or deleted.
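When the model has to be set up manually, a quick check of the extracted folder can catch a wrong path before the operator is initialized. The sketch below assumes the extracted bert-base-uncased.zip has the usual Hugging Face layout (`config.json`, `vocab.txt`, and a weight file such as `pytorch_model.bin` or `model.safetensors`) directly inside the folder you specify; `check_bert_folder` is a hypothetical helper, not part of the operator.

```python
from pathlib import Path
from typing import List

# Assumed layout of the extracted bert-base-uncased folder (Hugging Face style).
REQUIRED_FILES = ("config.json", "vocab.txt")
WEIGHT_FILES = ("pytorch_model.bin", "model.safetensors")


def check_bert_folder(path) -> List[str]:
    """Return a list of problems with a local BERT folder; an empty list means it looks usable."""
    folder = Path(path)
    if not folder.is_dir():
        return [f"not a directory: {folder}"]
    problems = [f"missing {name}" for name in REQUIRED_FILES
                if not (folder / name).is_file()]
    # At least one of the known weight-file formats must be present.
    if not any((folder / name).is_file() for name in WEIGHT_FILES):
        problems.append("missing model weights (" + " or ".join(WEIGHT_FILES) + ")")
    return problems


if __name__ == "__main__":
    import sys
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    issues = check_bert_folder(target)
    print("OK" if not issues else "\n".join(issues))
```

A frequent cause of "missing" reports in a check like this is pointing at the parent of the extracted folder instead of the folder that directly contains the files.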

Prompt Sentence

Parameter Description

English words or sentences that guide detection. The model attempts to find objects in the image that match this description. This parameter is required.

Parameter Adjustment

Use clear, specific English descriptions. To detect several different object categories, separate the prompt words or phrases with English periods or commas, for example "chair . table . person" or "red car, blue bike". Prompt quality directly affects detection performance.
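A minimal sketch of how a Prompt Sentence might be split into individual prompts at English periods and commas. `split_prompts` and `build_caption` are hypothetical helpers; the lowercase, period-separated caption follows the convention recommended by the open-source Grounding DINO project and is an assumption about what this operator passes to the model.

```python
import re
from typing import List


def split_prompts(prompt_sentence: str) -> List[str]:
    """Split a Prompt Sentence into individual prompts at English periods or commas."""
    parts = re.split(r"[.,]", prompt_sentence)
    # Strip whitespace and drop empty fragments (e.g. from a trailing period).
    return [p.strip() for p in parts if p.strip()]


def build_caption(prompts: List[str]) -> str:
    """Join prompts into one lowercase, period-separated caption such as 'chair . table .'."""
    return " . ".join(p.lower() for p in prompts) + " ."


if __name__ == "__main__":
    prompts = split_prompts("red car, blue bike")
    print(prompts, build_caption(prompts))
```

Because periods act as delimiters in this sketch, a prompt that itself contains a period (such as a decimal number) would be split in two.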

Category Mapping

Parameter Description

Maps the objects detected for each prompt to numeric category IDs that you specify (integers starting from 0). This is useful for later category-based filtering or processing.

Parameter Adjustment

Enter a series of numbers separated by English periods or commas. The numbers correspond, in order, to the prompts separated by delimiters in "Prompt Sentence". For example, if the prompts are "cat . dog . bird", the category mapping can be "0 . 1 . 2", so detected cats are labeled as category 0, dogs as category 1, and birds as category 2. If this parameter is empty, or fewer mappings than prompts are provided, the operator automatically assigns category IDs 0, 1, 2, … in prompt order. To use your own IDs, provide at least as many mappings as there are prompts.
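The mapping rule described above, including the fallback to sequential IDs, can be sketched as follows. The function names are hypothetical, and the handling of surplus mapping values (they are simply ignored here) is an assumption, since the text does not specify it.

```python
import re
from typing import Dict, List


def parse_category_mapping(mapping_text: str) -> List[int]:
    """Parse a mapping such as '0 . 1 . 2' or '0, 1, 2' into integers."""
    return [int(tok) for tok in re.split(r"[.,]", mapping_text) if tok.strip()]


def assign_category_ids(prompts: List[str], mapping_text: str) -> Dict[str, int]:
    """Map each prompt to a category ID, falling back to 0, 1, 2, ... when needed."""
    ids = parse_category_mapping(mapping_text)
    if len(ids) < len(prompts):
        # Empty or too-short mapping: assign IDs in prompt order instead.
        ids = list(range(len(prompts)))
    # Assumption: values beyond the number of prompts are ignored.
    return {prompt: ids[i] for i, prompt in enumerate(prompts)}


if __name__ == "__main__":
    print(assign_category_ids(["cat", "dog", "bird"], "0 . 1 . 2"))
    print(assign_category_ids(["cat", "dog", "bird"], "3 . 4"))  # too short: falls back
```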

Detection Box Confidence Threshold

Parameter Description

Bounding-box confidence score threshold used to filter detection results. Only detection boxes with scores above this threshold are retained.

Parameter Adjustment

Increasing this value yields fewer detection results, keeping only targets the model is highly confident about and reducing false positives. Decreasing it yields more detection results, including less confident targets, but may also increase false positives. Adjust it based on the observed results.

Parameter Range

[0, 1], Default: 0.3
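The filtering rule amounts to a strict comparison against the threshold. In this sketch, `filter_by_box_threshold` is a hypothetical name, and detections are assumed to arrive as parallel lists of boxes and scores.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]


def filter_by_box_threshold(boxes: List[Box], scores: List[float],
                            box_threshold: float = 0.3) -> List[Tuple[Box, float]]:
    """Keep only detections whose confidence score is above box_threshold."""
    if not 0.0 <= box_threshold <= 1.0:
        raise ValueError("box_threshold must be in [0, 1]")
    return [(box, score) for box, score in zip(boxes, scores) if score > box_threshold]


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (5, 5, 20, 20), (1, 1, 2, 2)]
    scores = [0.9, 0.35, 0.2]
    # Raising the threshold keeps fewer detections.
    for t in (0.1, 0.3, 0.5):
        print(t, len(filter_by_box_threshold(boxes, scores, t)))
```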

Detection Category Confidence Threshold

Parameter Description

Text-image matching score threshold used to filter detection results. The matching score measures how well a detected region matches its corresponding text prompt.

Parameter Adjustment

Increasing this value requires a closer semantic match between detection results and the text prompts, which helps filter out results that have high box confidence but little relevance to the prompts. Decreasing it lets results with lower matching scores pass.

Parameter Range

[0, 1], Default: 0.25
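In the open-source Grounding DINO reference code, the box threshold is compared with each box's highest token-level matching score, and the text threshold decides which prompt tokens are attributed to that box. The sketch below mirrors that logic in plain Python under simplifying assumptions: tokens are whitespace-separated words (the real model uses BERT word pieces), `decode_box` is a hypothetical name, and whether this operator applies the two thresholds in exactly this way, including discarding a box with no sufficiently matched text, is an assumption.

```python
from typing import Optional, Sequence, Tuple


def decode_box(token_scores: Sequence[float], tokens: Sequence[str],
               box_threshold: float = 0.3,
               text_threshold: float = 0.25) -> Optional[Tuple[str, float]]:
    """Apply both thresholds to one candidate box.

    token_scores[j] is the matching score (in [0, 1]) between this box and tokens[j].
    Returns (matched_phrase, box_score), or None if the box is filtered out.
    """
    if len(token_scores) != len(tokens):
        raise ValueError("token_scores and tokens must have the same length")
    # Box confidence: the box's best score over all prompt tokens.
    box_score = max(token_scores)
    if box_score <= box_threshold:
        return None
    # Text threshold: keep only the tokens this box matches strongly enough.
    phrase = " ".join(t for t, s in zip(tokens, token_scores) if s > text_threshold)
    if not phrase:
        # Assumption: a box with no sufficiently matched text is discarded.
        return None
    return phrase, box_score


if __name__ == "__main__":
    tokens = ["red", "car", "blue", "bike"]
    scores = [0.28, 0.62, 0.05, 0.03]
    print(decode_box(scores, tokens))
    print(decode_box(scores, tokens, text_threshold=0.3))
```

With the defaults, the weakly matched "red" still joins "car" in the phrase; raising the text threshold to 0.3 narrows the match to "car" alone, which is the kind of stricter semantic filtering the adjustment advice describes.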